Linear Algebra for AI and ML

Linear algebra is a branch of mathematics that describes linear systems using linear equations and linear functions, and deals with their solutions. An equation is linear if every term is either a constant or a constant multiplied by a single variable raised to the first power; it contains no products of variables and no exponents greater than one. A system is linear if it satisfies the conditions of superposition and homogeneity. A system of linear equations (or linear system) is a collection of one or more linear equations involving the same variables (Wikipedia).

Linear algebra is the study of linear combinations, including vector spaces, lines and planes, and the mappings that perform linear transformations. It deals with linear equations and their representations in vector spaces and through matrices, and it helps us understand the underlying structure of linear systems.

It is used to model a large variety of natural phenomena and allows efficient computation with such models. A system of linear equations models real-world systems with linear characteristics; such a system arises when two or more linear equations must hold at the same time. Such equations are used to solve problems in many fields, including economics, engineering, physics, chemistry, transportation and logistics, and investment forecasting. Nonlinear systems are often handled with linear algebra through first-order (linear) approximations.

A system of linear equations has exactly one of the following outcomes:

- No solution exists; the equations are inconsistent.

- Exactly one solution exists.

- Infinitely many solutions exist.
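One way to tell these three cases apart is to compare the rank of the coefficient matrix with the rank of the augmented matrix $[A \mid b]$ (the Rouché-Capelli theorem). The sketch below assumes NumPy is available. The helper name `classify_system` is hypothetical and chosen for illustration.

```python
import numpy as np

def classify_system(A, b):
    """Classify the linear system A x = b by comparing matrix ranks."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float).reshape(-1, 1)
    rank_A = np.linalg.matrix_rank(A)
    rank_aug = np.linalg.matrix_rank(np.hstack([A, b]))  # rank of [A | b]
    n_vars = A.shape[1]
    if rank_A < rank_aug:
        return "no solution"                # inconsistent equations
    if rank_A == n_vars:
        return "unique solution"            # no free variables
    return "infinitely many solutions"      # at least one free variable

# x + y = 2 and x - y = 0 meet at a single point
print(classify_system([[1, 1], [1, -1]], [2, 0]))  # unique solution
# Parallel lines: x + y = 2 and x + y = 3
print(classify_system([[1, 1], [1, 1]], [2, 3]))   # no solution
# The same line written twice: x + y = 2 and 2x + 2y = 4
print(classify_system([[1, 1], [2, 2]], [2, 4]))   # infinitely many solutions
```

In floating point, `matrix_rank` uses a tolerance on the singular values, so nearly dependent equations may be reported as dependent.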

Solving a system of linear equations in three variables means finding the intersection of planes, because each equation describes a plane in 3D space. For a system of three equations in three variables, the possible cases are the following.

- All three planes intersect at a single point. Then there is a unique solution.

- The three planes intersect along a common line, or all coincide in one plane. In this case there are infinitely many solutions.

- The planes have no point in common: they do not intersect at all (for example, parallel planes), or they intersect only in pairs. In this case there is no solution.

Systems that have a single solution are those for which the ordered triple $(x, y, z)$ defines a point that is the intersection of the three planes in space. Systems that have infinitely many solutions are those for which the solutions form a line or a coincident plane that serves as the intersection of the three planes. Systems that have no solution are those represented by three planes with no point in common.
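For the unique-solution case, the intersection point can be computed numerically. This is a minimal sketch, assuming NumPy. The three planes below are an illustrative example and are not taken from the text. A nonzero determinant of the coefficient matrix signals that the planes meet in exactly one point.

```python
import numpy as np

# Each row is one plane: x + y + z = 6, 2y + 5z = -4, 2x + 5y - z = 27
A = np.array([[1.0, 1.0, 1.0],
              [0.0, 2.0, 5.0],
              [2.0, 5.0, -1.0]])
b = np.array([6.0, -4.0, 27.0])

# A nonzero determinant means the three planes meet in a single point
print(np.linalg.det(A))              # approximately -21.0

point = np.linalg.solve(A, b)        # the ordered triple (x, y, z)
print(point)                         # approximately [ 5.  3. -2.]

# The point lies on all three planes
print(np.allclose(A @ point, b))     # True
```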

Calculus and Linear Algebra

The term “linear” refers not only to linear algebraic equations but also to linear differential equations (both ordinary and partial), linear boundary value problems, linear integral equations, linear iterative systems, linear control systems, and so on.

Linear algebra and calculus are two fundamental areas of mathematics that are interconnected in many ways. Calculus deals with functions and their derivatives and integrals, while linear algebra deals with vectors, matrices and the linear maps between vector spaces.

Differential Calculus and Linear Algebra

The derivative of a function at a point is the best linear approximation to the function near that point. In optimization problems it is often necessary to find the maxima or minima of a function by setting its derivatives equal to zero. Such problems can be expressed in terms of linear algebra. For a quadratic function of several variables, for example, setting the gradient to zero yields a system of linear equations.
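As an illustration, take $f(x) = \tfrac{1}{2} x^T A x - b^T x$ with $A$ symmetric positive definite. Its gradient is $Ax - b$, so the minimizer is the solution of the linear system $Ax = b$. This is a sketch assuming NumPy. The particular $A$ and $b$ are made up for the example.

```python
import numpy as np

# Quadratic f(x) = 0.5 * x^T A x - b^T x with a symmetric positive definite A
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([1.0, 4.0])

def f(x):
    return 0.5 * x @ A @ x - b @ x

# Setting the gradient A x - b to zero gives the linear system A x = b
x_min = np.linalg.solve(A, b)
print(x_min)  # approximately [-0.4  2.2]

# Small steps away from x_min increase f, as expected at a minimum
for step in ([1e-3, 0.0], [0.0, 1e-3], [-1e-3, 1e-3]):
    assert f(x_min + np.array(step)) > f(x_min)
```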

Linear algebra is used to handle problems in multivariate calculus. There, gradients and Jacobians are vectors and matrices of first derivatives, and Hessians are matrices of second derivatives. Systems of linear differential equations can be solved using eigenvalues and eigenvectors, which are concepts from linear algebra.
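For a system $x'(t) = A x(t)$ with diagonalizable $A = V \,\mathrm{diag}(\lambda)\, V^{-1}$, the solution is $x(t) = V \,\mathrm{diag}(e^{\lambda t})\, V^{-1} x(0)$. This sketch assumes NumPy and a coefficient matrix with real, distinct eigenvalues, picked for illustration. It checks the result against a finite-difference derivative.

```python
import numpy as np

# System x'(t) = A x(t) with initial condition x(0) = x0
A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])   # eigenvalues -1 and -2
x0 = np.array([1.0, 0.0])

# Diagonalize A = V diag(lam) V^{-1}
lam, V = np.linalg.eig(A)
c = np.linalg.solve(V, x0)     # coordinates of x0 in the eigenvector basis

def x(t):
    # Each eigen-component evolves independently as exp(lambda * t)
    return (V @ (c * np.exp(lam * t))).real

# Check the ODE numerically with a central difference at t = 0.7
t, h = 0.7, 1e-6
derivative = (x(t + h) - x(t - h)) / (2 * h)
print(np.allclose(derivative, A @ x(t), atol=1e-6))  # True
```

For this particular system, the first component equals $2e^{-t} - e^{-2t}$, which is one way to double-check the code by hand.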

Matrix Calculus and Linear Algebra

Matrix calculus is a notation for doing multivariable calculus. It deals with derivatives and integrals of multivariate functions, that is, functions of multiple variables. It allows us to write the partial derivatives of such functions as a vector or a matrix that can be treated as a single entity. This greatly simplifies common operations such as finding the maximum or minimum of a multivariate function and solving systems of linear differential equations. In essence, matrix calculus can be viewed as an extension of linear algebra to multivariate data and functions. It is a fundamental tool for understanding and solving problems in machine learning and data science.
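A typical matrix-calculus identity is $\nabla_x (x^T A x) = (A + A^T)\,x$. It replaces a whole set of partial derivatives with one vector expression. The sketch below, assuming NumPy and a random matrix, compares that formula with partial derivatives estimated one coordinate at a time.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.normal(size=(3, 3))   # a general (non-symmetric) matrix
x = rng.normal(size=3)

def f(v):
    return v @ A @ v          # scalar function f(v) = v^T A v

# Matrix-calculus result: the gradient of v^T A v is (A + A^T) v
grad_formula = (A + A.T) @ x

# Central finite differences, one partial derivative per coordinate
h = 1e-6
grad_numeric = np.array([
    (f(x + h * e) - f(x - h * e)) / (2 * h) for e in np.eye(3)
])
print(np.allclose(grad_formula, grad_numeric, atol=1e-6))  # True
```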

Integral Calculus and Linear Algebra

The concept of linear transformations, a key topic in linear algebra, is also important in integral calculus. For instance, the Fourier transform, which is defined by an integral, is a linear transformation. Numerical methods for approximating integrals often use concepts from linear algebra. The method of least squares, used to fit a function to data points, connects the two areas. Fitting to discrete data is a linear algebra problem, while approximating a function over an interval replaces the sums with integrals. In integral calculus, functions can be treated as vectors in function spaces, with an inner product defined by an integral such as $\langle f, g \rangle = \int_a^b f(x)\, g(x)\, dx$.
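The discrete least-squares fit mentioned above reduces to linear algebra. Fitting $y \approx c_0 + c_1 t$ means finding $c$ that minimizes $\|Xc - y\|^2$, which satisfies the normal equations $X^T X c = X^T y$. This is a minimal sketch, assuming NumPy. The data points are invented for the example.

```python
import numpy as np

# Fit a straight line y ≈ c0 + c1 * t to a few data points
t = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 2.9, 5.2, 6.9])

# Design matrix: a column of ones (intercept) and a column of t values
X = np.column_stack([np.ones_like(t), t])

# Normal equations: (X^T X) c = X^T y
c_normal = np.linalg.solve(X.T @ X, X.T @ y)

# NumPy's least-squares solver minimizes ||X c - y||^2 directly
c_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print(c_normal, c_lstsq)  # both approximately [1. 2.]
```

Both routes give the same coefficients here. In practice `lstsq` is usually preferred, because forming $X^T X$ can worsen numerical conditioning.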

Linear Algebra: the Mathematics of Data

In machine learning and data science applications, a dataset with real-valued variables can contain hundreds of variables, in which case solving problems by hand becomes impossible. Solutions therefore require large matrices and algorithms implemented on computers.

Linear algebra is a field of mathematics that could be called the mathematics of data. Modern statistics uses both the notation and the tools of linear algebra to describe statistical methods and techniques. As the mathematics of data, linear algebra is therefore essential for understanding the theory behind machine learning, and especially deep learning.
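As one example of a statistical quantity written in linear-algebra notation, the sample covariance matrix of a dataset $X$ can be computed with a single matrix product. Here $X$ holds $n$ samples as rows and $d$ variables as columns. The formula is $\frac{1}{n-1} X_c^T X_c$, where $X_c$ is $X$ with each column mean subtracted. This is a sketch assuming NumPy and a made-up toy dataset.

```python
import numpy as np

# A toy dataset: 5 samples (rows) with 3 real-valued features (columns)
X = np.array([[2.0, 0.5, 1.0],
              [1.5, 1.0, 0.0],
              [3.0, 0.0, 2.0],
              [2.5, 1.5, 1.0],
              [1.0, 2.0, 0.5]])
n = X.shape[0]

# Centre each feature, then express the sample covariance as a matrix product
Xc = X - X.mean(axis=0)
cov = Xc.T @ Xc / (n - 1)

# np.cov treats rows as variables by default, so rowvar=False is needed here
print(np.allclose(cov, np.cov(X, rowvar=False)))  # True
```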

Linear Least Squares

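A vector space is a nonempty set V of vectors on which two operations, vector addition and scalar multiplication, are defined and satisfy the vector space axioms. These are closure under both operations, associativity and commutativity of addition, the existence of a zero vector and of additive inverses, and the distributive, compatibility and identity laws for scalar multiplication. A function $f$ between vector spaces is a linear map (linear transformation) if it satisfies the following two properties.

- Homogeneity (scaling): $f(\alpha v) = \alpha f(v)$ for any scalar $\alpha$.

- Additivity (superposition): $f(v + u) = f(v) + f(u)$ for any two vectors $v$ and $u$.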
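The two linearity properties can be spot-checked numerically on random inputs. This is evidence, not a proof. The sketch assumes NumPy, and `is_linear` is a hypothetical helper written for illustration. Matrix multiplication passes the check, while squaring and adding a constant fail it.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.normal(size=(2, 3))   # an arbitrary 2 x 3 matrix

def is_linear(f, dim, trials=5):
    """Spot-check additivity and homogeneity on random inputs (not a proof)."""
    for _ in range(trials):
        u, v = rng.normal(size=dim), rng.normal(size=dim)
        alpha = rng.normal()
        if not np.allclose(f(u + v), f(u) + f(v)):      # additivity
            return False
        if not np.allclose(f(alpha * v), alpha * f(v)):  # homogeneity
            return False
    return True

print(is_linear(lambda v: M @ v, 3))    # True: matrix multiplication is linear
print(is_linear(lambda v: v ** 2, 3))   # False: squaring is not
print(is_linear(lambda v: v + 1.0, 3))  # False: a shift breaks both properties
```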

Key operations on vectors and matrices include the following.

- Addition and subtraction: $u + v$, $u - v$.

- Convex combinations $\alpha u + \beta v$ with $\alpha, \beta \ge 0$ and $\alpha + \beta = 1$.

- The dot product $u \cdot v$ of two vectors, which results in a scalar.

- The angle $\theta$ between $u$ and $v$, where $\cos\theta = \frac{u \cdot v}{\|u\|\,\|v\|}$.

- The transpose of a vector $u$, denoted $u^T$. The transpose of a column vector is a row vector and vice versa.

- A row or column vector can be stored as a 1D array. The inner product $u^T v$ is a scalar.

- $u^T u = \|u\|^2$, the square of the magnitude of $u$.

- For $u \in \mathbb{R}^n$, the outer product $u u^T$ is an $n \times n$ matrix, i.e. a 2D array.

- Scalar multiplication of matrices: $\alpha U$, $\beta V$.

- The transpose of an $n \times m$ matrix $U$ is $U^T$, an $m \times n$ matrix.

- The product of an $n \times m$ matrix $U$ with an $m \times n$ matrix $V$ is $W = UV$, an $n \times n$ matrix.

- The product of an $m \times n$ matrix $V$ with an $n \times m$ matrix $U$ is $R = VU$, an $m \times m$ matrix.

- If two matrices have the same dimensions, the following operations can be performed (written here in MATLAB notation): addition and subtraction U + V, U - V; element-by-element product U.*V; element-by-element power U.^V; element-by-element right division U./V; element-by-element left division U.\V, which equals V./U.
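The same operations can be written in NumPy, which is an assumption here; the vectors and matrices are arbitrary examples. NumPy uses `@` for matrix (and dot) products and `*`, `**`, `/` for the element-by-element versions that MATLAB writes as `.*`, `.^`, `./`.

```python
import numpy as np

u = np.array([1.0, 2.0, 2.0])
v = np.array([2.0, 0.0, 1.0])

dot = u @ v                              # inner product u^T v, a scalar (4.0)
norm_u, norm_v = np.linalg.norm(u), np.linalg.norm(v)
cos_theta = dot / (norm_u * norm_v)      # cosine of the angle between u and v
theta = np.arccos(cos_theta)             # the angle itself, in radians
squared_norm = u @ u                     # u^T u = ||u||^2 (9.0)
outer = np.outer(u, u)                   # u u^T, a 3 x 3 matrix
convex = 0.25 * u + 0.75 * v             # convex combination: weights >= 0, sum to 1

U = np.arange(1.0, 7.0).reshape(2, 3)    # a 2 x 3 matrix
V = np.arange(1.0, 7.0).reshape(3, 2)    # a 3 x 2 matrix
print(U.T.shape, (U @ V).shape, (V @ U).shape)  # (3, 2) (2, 2) (3, 3)

# Element-wise operations need equal shapes
P = np.full((2, 3), 2.0)
product = U * P                          # MATLAB U.*P
power = U ** P                           # MATLAB U.^P
right_div = U / P                        # MATLAB U./P
left_div = P / U                         # MATLAB U.\P, i.e. P./U
```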

Terms related to Matrices

Minors of a matrix - A minor exists for every element $u_{ij}$ of a square matrix $U$. It is the determinant of the sub-matrix formed by deleting the $i$th row and $j$th column of $U$. Unlike cofactors, minors do not include the sign factor $(-1)^{i+j}$; a minor can still be negative, since it is a determinant value. The matrix of minors is a square matrix of the same order as $U$, formed from its minors.
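The definition translates directly into a short loop. This is a sketch assuming NumPy; `matrix_of_minors` is a hypothetical helper name. In the example matrix, the minor of $u_{12}$ is $-5$, which shows that a minor can be negative even before any cofactor sign is applied.

```python
import numpy as np

def matrix_of_minors(U):
    """Return the matrix whose (i, j) entry is the minor of element u_ij."""
    U = np.asarray(U, dtype=float)
    n = U.shape[0]
    minors = np.empty_like(U)
    for i in range(n):
        for j in range(n):
            # Delete row i and column j, then take the determinant of the rest
            sub = np.delete(np.delete(U, i, axis=0), j, axis=1)
            minors[i, j] = np.linalg.det(sub)
    return minors

U = np.array([[1.0, 2.0, 3.0],
              [0.0, 4.0, 5.0],
              [1.0, 0.0, 6.0]])
minors = matrix_of_minors(U)   # approximately [[24, -5, -4], [12, 3, -2], [-2, 5, 4]]

# Cofactors multiply each minor by the sign (-1)**(i + j)
signs = (-1.0) ** np.add.outer(np.arange(3), np.arange(3))
cofactors = signs * minors
```

As a check, the transposed cofactor matrix (the adjugate) satisfies $U \,\mathrm{adj}(U) = \det(U)\, I$.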

Eigenvalues and Eigenvectors

Consider a 2D vector $\mathbf{x} = [x, y]^T$ and a $2 \times 2$ matrix $A$. Suppose multiplying a nonzero $\mathbf{x}$ by $A$ keeps it on the same line through the origin and only scales it by a value $\lambda$, i.e.,

$$A\mathbf{x} = \lambda \mathbf{x}.$$

Then $\mathbf{x}$ is an eigenvector associated with the eigenvalue $\lambda$ of the matrix $A$. The following figures illustrate the concepts of eigenvalues and eigenvectors.

A diagonalizable matrix $A$ can be factorized as $A = U \Lambda U^{-1}$. Here the columns of $U$ are eigenvectors of $A$ and $\Lambda$ is a diagonal matrix containing the eigenvalues of $A$. This factorization exists only for diagonalizable matrices. When $A$ is real and symmetric, $U$ can be chosen to be orthonormal (i.e., $U^T U = I$), so that

$$A = U \Lambda U^T.$$

This form is also called the spectral decomposition. If one or more eigenvalues of a matrix are zero, its determinant is zero, since the determinant equals the product of the eigenvalues. A real matrix with complex eigenvalues is not diagonalizable over the real numbers, although it may still be diagonalizable over the complex numbers.
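For a real symmetric matrix, the spectral decomposition $A = U \Lambda U^T$ can be computed and checked numerically. This is a sketch assuming NumPy, whose `np.linalg.eigh` handles symmetric (Hermitian) matrices and returns eigenvalues in ascending order. The matrix is an arbitrary example.

```python
import numpy as np

# A real symmetric matrix, so an orthonormal eigenvector matrix exists
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

eigenvalues, U = np.linalg.eigh(A)   # ascending order: 1 and 3

# Each column of U satisfies A x = lambda x
for lam, x in zip(eigenvalues, U.T):
    assert np.allclose(A @ x, lam * x)

Lam = np.diag(eigenvalues)
print(np.allclose(U.T @ U, np.eye(2)))                     # True: U is orthonormal
print(np.allclose(U @ Lam @ U.T, A))                       # True: A = U Lambda U^T
print(np.isclose(np.prod(eigenvalues), np.linalg.det(A)))  # True: det = product of eigenvalues
```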

Singular Value Decomposition

The SVD method decomposes a matrix $M$ into three matrices, $M = U \Sigma V^T$. Two of them, the orthogonal matrices $U$ and $V$, have the left and right singular vectors as their columns. The third, $\Sigma$, is a diagonal matrix whose diagonal elements are the singular values. Geometrically, the method factorizes $M$ into a rotation (possibly combined with a reflection), followed by a rescaling, followed by another rotation. The diagonal entries of the rescaling matrix are uniquely determined by the original matrix and are known as the singular values of the matrix. The singular vectors of a matrix describe the directions along which it acts, the first right singular vector being the direction it stretches most. The corresponding singular values give the magnitude of that action.
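The factorization $M = U \Sigma V^T$ can be computed with NumPy's `np.linalg.svd`, which returns $V^T$ rather than $V$ and the singular values as a 1D array in descending order. This sketch uses an arbitrary $2 \times 3$ example matrix. It verifies the reconstruction and checks that the first right singular vector is stretched by exactly the largest singular value.

```python
import numpy as np

M = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# full_matrices=False returns the compact ("thin") factors
U, s, Vt = np.linalg.svd(M, full_matrices=False)

print(s)                                         # singular values, largest first
print(np.allclose(U @ np.diag(s) @ Vt, M))       # True: M = U Sigma V^T

# The first right singular vector is the unit input direction M stretches most
v1 = Vt[0]
print(np.isclose(np.linalg.norm(M @ v1), s[0]))  # True
```

For this matrix, $M M^T$ has eigenvalues 12 and 10, so the singular values are $\sqrt{12}$ and $\sqrt{10}$.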

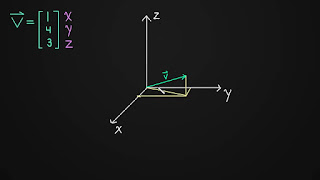

- Scalars are rank-0 (0-D) tensors.

- Vectors are rank-1 (1-D) tensors.

- Matrices are rank-2 (2-D) tensors.

- 3-D and higher-dimensional tensors: rank-3 tensors are stacks of rank-2 tensors, rank-4 tensors are stacks of rank-3 tensors, and so on.
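In NumPy (assumed here), the tensor "rank" in the sense of the list above corresponds to an array's number of axes, `ndim`. This is different from the linear-algebra rank of a matrix. The sketch builds tensors of rank 0 to 4 by stacking lower-rank ones.

```python
import numpy as np

scalar = np.array(5.0)                    # rank 0
vector = np.array([1.0, 2.0, 3.0])        # rank 1
matrix = np.array([[1.0, 2.0],
                   [3.0, 4.0]])           # rank 2

# Stacking rank-2 tensors along a new axis gives a rank-3 tensor
tensor3 = np.stack([matrix, 2 * matrix, 3 * matrix])
# Stacking rank-3 tensors gives a rank-4 tensor, and so on
tensor4 = np.stack([tensor3, tensor3])

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix),
                ("tensor3", tensor3), ("tensor4", tensor4)]:
    print(name, t.ndim, t.shape)
# scalar 0 ()
# vector 1 (3,)
# matrix 2 (2, 2)
# tensor3 3 (3, 2, 2)
# tensor4 4 (2, 3, 2, 2)
```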

Applications in Natural Language Processing

In NLP applications, text is represented numerically as arrays. A bag-of-words representation describes a document by the counts of the words it contains, ignoring word order; a collection of such vectors forms a bag-of-words matrix. A term-document matrix is a numerical matrix that records how often each term occurs in each document. These representations store counts and frequencies of words occurring in documents and are used to measure similarity between words, documents and so on. This supports tasks such as document classification, sentiment analysis, semantic analysis, language translation, language generation and information retrieval.
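A term-document matrix can be built with a few lines of code. Documents can then be compared by the cosine of the angle between their column vectors. This is a minimal sketch assuming NumPy, whitespace tokenization and a tiny made-up corpus. Real pipelines typically add normalization (lowercasing, stemming, weighting such as TF-IDF).

```python
import numpy as np

# A tiny made-up corpus of three documents
docs = ["the cat sat on the mat",
        "the dog sat on the log",
        "cats and dogs are pets"]

# Vocabulary: every distinct word, in sorted order
vocab = sorted({word for doc in docs for word in doc.split()})
index = {word: i for i, word in enumerate(vocab)}

# Term-document matrix: rows are terms, columns are documents, entries are counts
td = np.zeros((len(vocab), len(docs)))
for j, doc in enumerate(docs):
    for word in doc.split():
        td[index[word], j] += 1

def cosine(a, b):
    return a @ b / (np.linalg.norm(a) * np.linalg.norm(b))

# Compare documents by the cosine of the angle between their count vectors
print(cosine(td[:, 0], td[:, 1]))  # approximately 0.75: several shared words
print(cosine(td[:, 0], td[:, 2]))  # 0.0: no words in common
```

Because tokens are matched exactly, "cat" and "cats" count as different terms, which is why the first and third documents score zero.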

Signals such as speech and audio change over time. To obtain segments that are approximately stationary, a signal is divided into short frames lasting a few milliseconds. Features carrying qualitative, temporal, spectral and other information are extracted from each frame. The resulting vectors of feature coefficients are stacked in sequence to form an $n \times m$ matrix that represents the signal.

A large collection of speech and audio signals can then be stored as a 3D tensor, which can be used for further data analysis and manipulation.
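The framing-and-stacking pipeline above can be sketched as follows. Windowed magnitude spectra stand in for the feature coefficients, which is one simple choice among many. The sketch assumes NumPy. The helper `signal_to_matrix`, the 8 kHz sample rate, the 25 ms frame and 10 ms hop, and the synthetic sine-wave "recordings" are all illustrative assumptions.

```python
import numpy as np

def signal_to_matrix(signal, frame_len, hop):
    """Split a 1D signal into overlapping frames and return magnitude spectra.

    Rows are frames (in time order), columns are spectral feature coefficients.
    """
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop: i * hop + frame_len] for i in range(n_frames)])
    window = np.hanning(frame_len)                # taper each frame's edges
    return np.abs(np.fft.rfft(frames * window, axis=1))

sample_rate = 8000                                # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate          # one second of audio
signals = [np.sin(2 * np.pi * f * t) for f in (220.0, 440.0, 880.0)]

# 25 ms frames (200 samples) with a 10 ms hop (80 samples)
frame_len, hop = 200, 80
matrices = [signal_to_matrix(s, frame_len, hop) for s in signals]

# Equal-length signals give equal-shape matrices, which stack into a 3D tensor
tensor = np.stack(matrices)
print(tensor.shape)  # (3, 98, 101): signals x frames x spectral coefficients
```

Signals of different lengths would produce matrices with different numbers of frames. These would need padding or truncation before they can be stacked.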

Image Data
